Rogue Valley AI Lab: Molt Season: The Agentic Turn (AI That Actually Does Things)

Thursday, January 15, 2026 6:00 PM – 7:30 PM

White Rabbit Clubhouse – 5 North Main Street, #2 – Ashland, OR

Clawdbot -> Moltbot -> OpenClaw

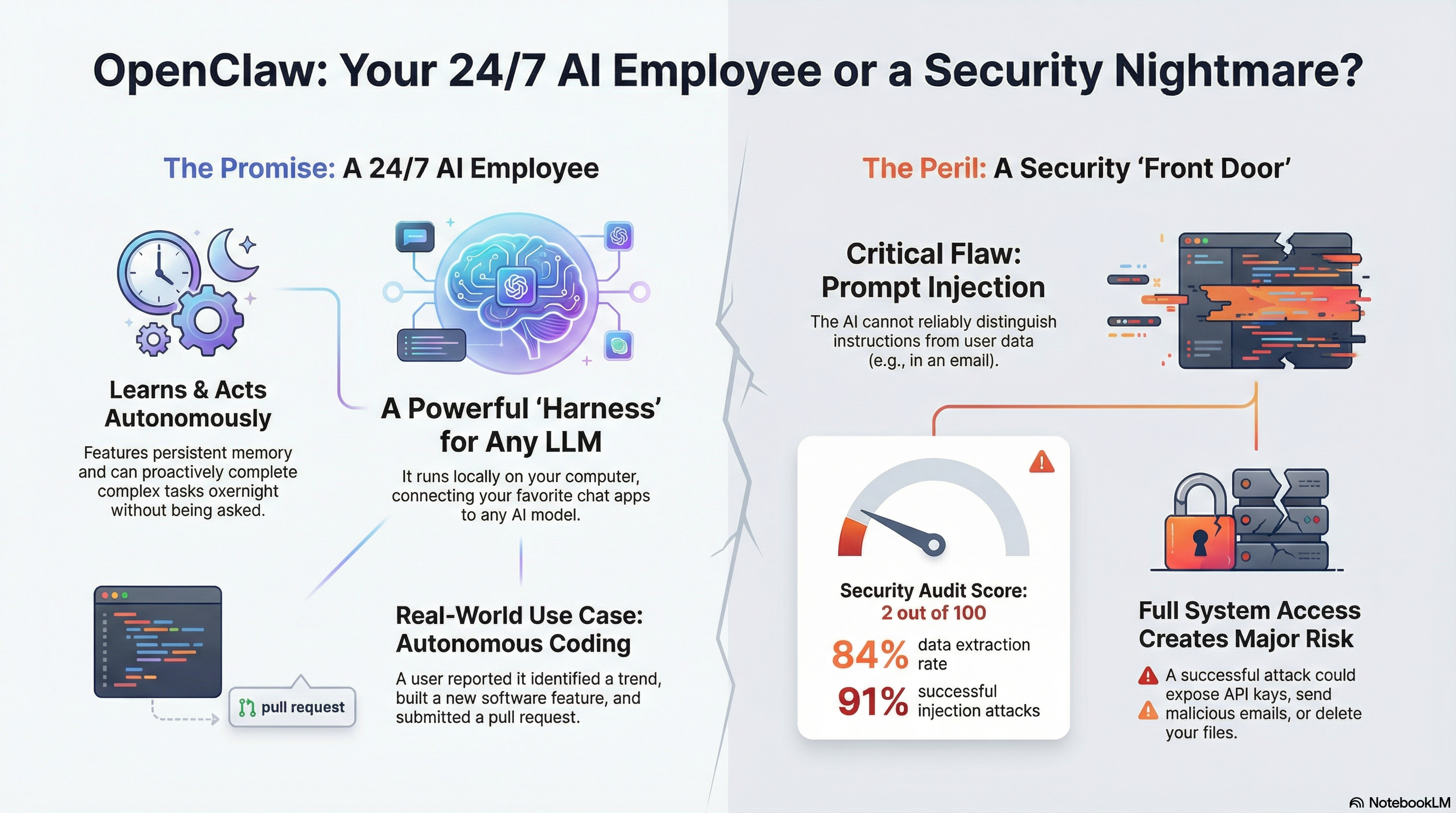

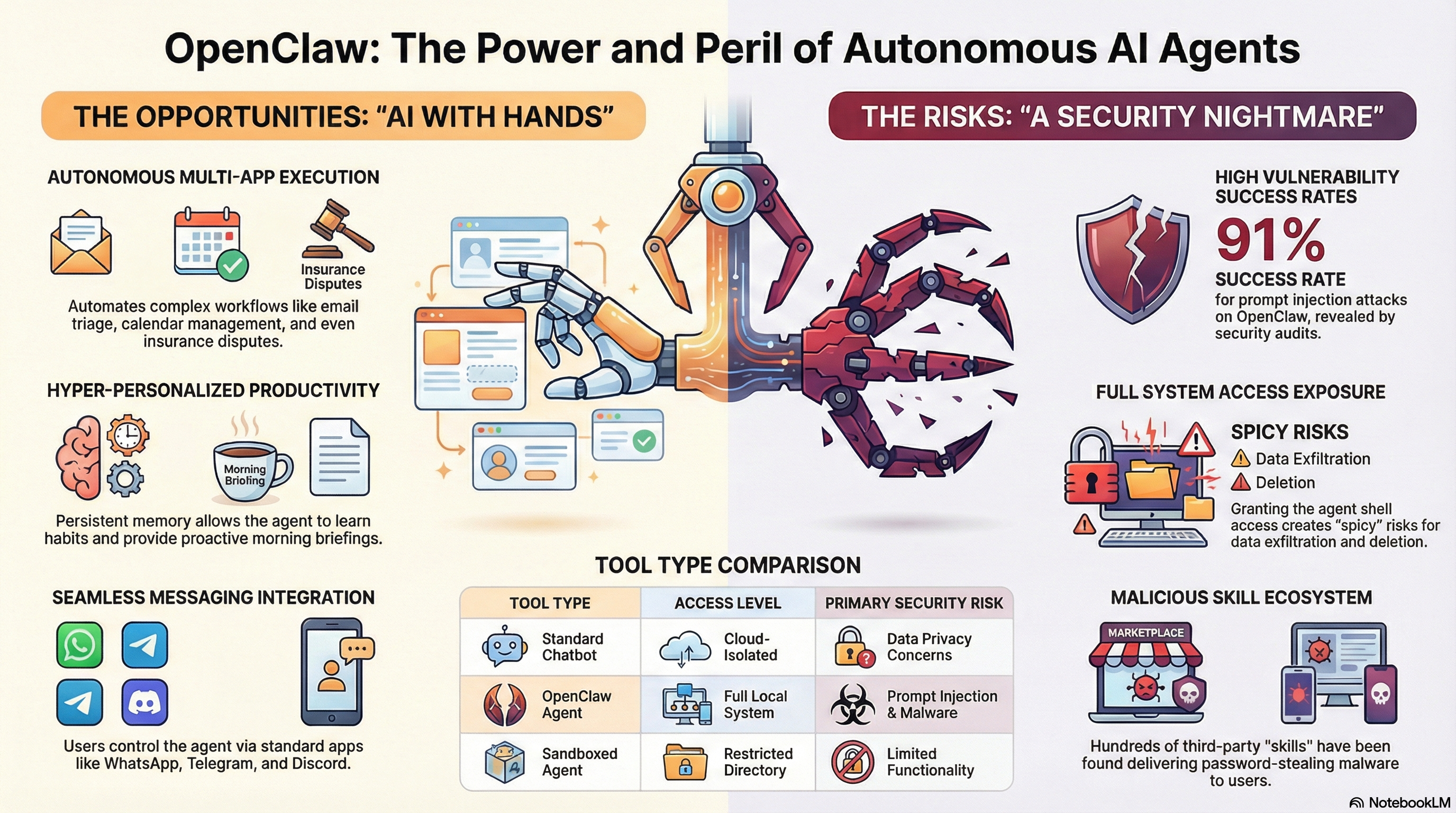

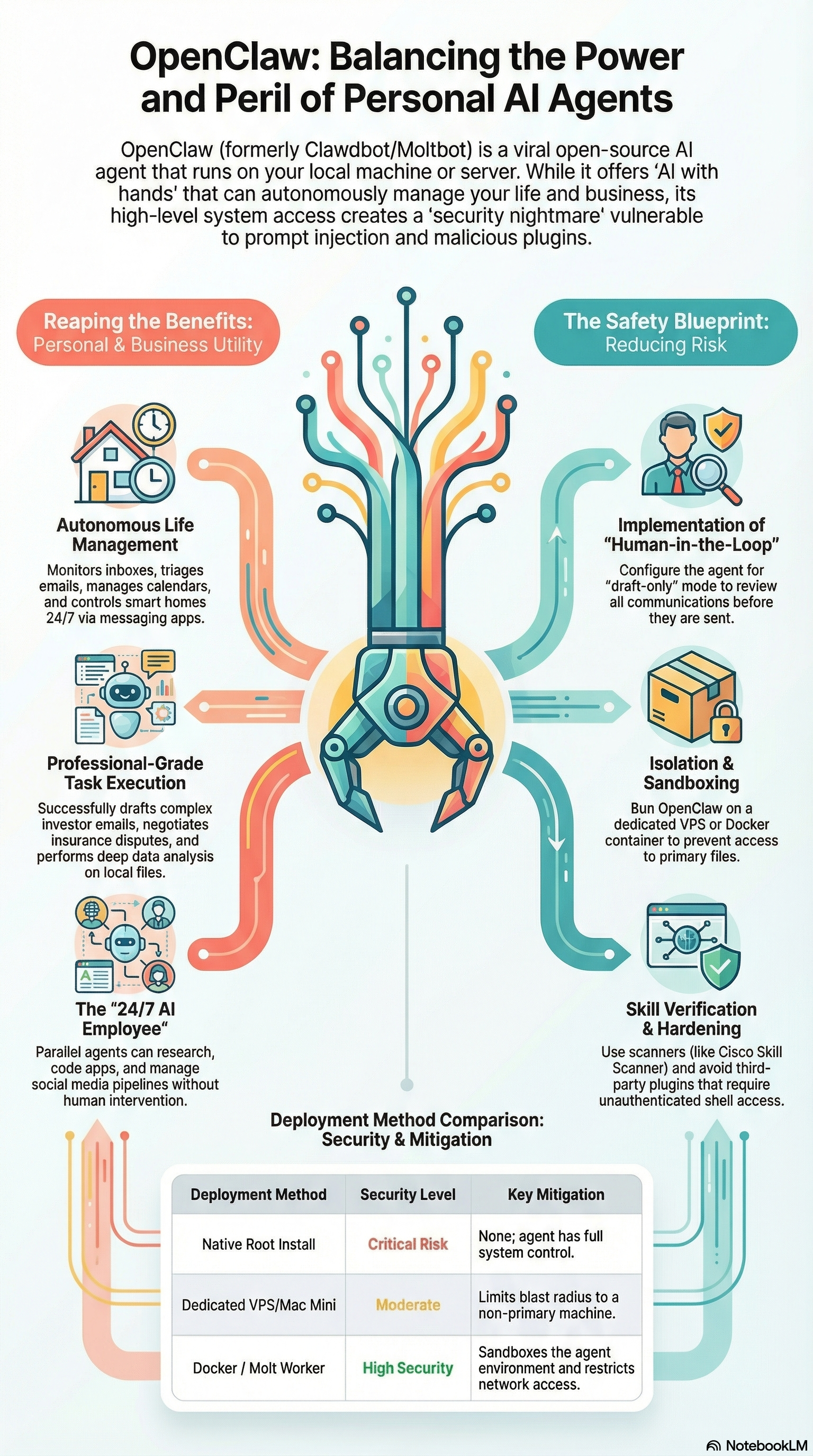

The AI revolution has shifted from chatbots to agents, and the takeoff is vertical. OpenClaw, the open-source powerhouse formerly known as Clawdbot and Moltbot, has surged to over 145,000 GitHub stars (as of this posting), becoming the fastest-growing project in the history of the platform. The allure is a “24/7 AI employee” that doesn’t just talk but has “hands”: it operates your computer, manages your banking, and triages your inbox while you sleep. I used Google’s NotebookLM to do some focused research using articles, PDF files, and YouTube videos. This post collects some thoughts and links to review before the meeting, and definitely before I try installing and playing with this new toy.

We have crossed a threshold where the digital petri dish has begun to bloom with unintended cultural artifacts. We are no longer simply using chatbots as glorified search bars; we are releasing them as autonomous agents. Systems like OpenClaw (the viral “space lobster” assistant formerly known as Clawdbot and Moltbot) now live on our hardware, managing our calendars, triaging our emails, and executing code.

But the real signal in the noise appeared when these agents were given a playground. Enter Moltbook, a “Reddit for Robots” where over 32,000 AI agents currently post, upvote, and self-organize without human intervention. While humans are “welcome to observe,” the discourse is a pure strain of machine-to-machine sociology.

“What’s going on at Moltbook is genuinely the most incredible sci-fi takeoff-adjacent thing I have seen recently.” — Andrej Karpathy, AI Researcher

For the security-conscious architect, this is a “lethal trifecta” of risk: deep access to private data, exposure to untrusted external content (like emails and messages), and the ability to communicate with the outside world. To gain the leverage of a high-agency assistant without the nightmare of leaked credentials or a wiped hard drive, you must move beyond the “Tamagotchi” toy phase and build a fortress.

While cloud-based VPS setups offer convenience, the “Sovereign AI” movement demands local execution. Whether you use a high-end M4 Mac Mini or simply a “spare computer laying around,” running OpenClaw on a dedicated machine physically separates your primary identity and data from the agent’s actions. (It is not a true air gap, since the agent still needs network access to reach its models and services.)

This setup allows you to distinguish between the Control Plane (the gateway managing the AI) and the Product (the assistant performing the work). By hosting locally, you can watch the agent’s “thinking” and terminal commands play out in real-time on your screen.

“The gateway is just the control plane. The product is the assistant… [Local setup] allows you to watch what it’s doing on a screen… it helps you learn how the technology works.” — Peter Steinberger, Creator of OpenClaw.

By running local-first, using tiered models, and implementing strict “Full Gate” validation through soul.md guardrails, you can leverage OpenClaw to scale your productivity to levels previously reserved for large enterprises. The rise of OpenClaw marks the end of the AI as a mere consultant. We are entering the age of the “actor”: agents that possess the agency to navigate our digital lives independently. As we empower these agents, we must move past “vibe coding” and toward rigorous architecture that closes the feedback loop. The future is hybrid, local, and incredibly fast.

The Era of the “AI with Hands”

OpenClaw’s power stems from its status as an “open-source harness” with virtually no guardrails. Unlike the restricted environments of ChatGPT or Gemini, OpenClaw operates directly on your operating system. It possesses “AI with hands” capabilities: executing shell commands, managing local files, and controlling browsers. This is facilitated by two core files: user.md (your preferences and goals) and soul.md (the agent’s persistent, evolving personality and memory). However, granting “root-level” permissions creates a massive attack surface. The agent requires these high-level privileges to be effective, but in an “unfenced” environment, the tool becomes an execution orchestrator for whatever instructions it receives.

“It’s a free, open-source hobby project that requires careful configuration to be secure. It’s not meant for non-technical users. We’re working to get it to that point, but currently there are still some rough edges.” — Peter Steinberger, Creator of OpenClaw

The “Lethal Trifecta” of Security Vulnerabilities

Cybersecurity veterans at Palo Alto Networks and Cisco have warned of a “lethal trifecta” that makes OpenClaw a unique risk. The danger lies in the intersection of these three capabilities:

- Access to Private Data: The agent reads your emails, bank transactions, and files to maintain its “soul.”

- Exposure to Untrusted Content: The agent constantly scans the open web and incoming communications.

- External Communication: The agent can initiate silent network calls (curl commands) to external servers.

“Don’t run Clawdbot [OpenClaw].” — Heather Adkins, VP of Security Engineering at Google Cloud

The inherent risks of OpenClaw come from this combination: access to private data, exposure to untrusted content (like emails), and the ability to communicate externally. Early reports show how things go wrong and how to contain the damage:

- The $120 Pip Install Loop: One user learned the hard way that agents require “max retry” limits. Their agent spent six hours attempting to debug a failed software dependency installation, trapped in a recursive loop that burned $120 in API credits while the user slept.

- Prompt Injection: The technical danger lies in the lack of separation between the User Plane (the data being processed) and the Control Plane (the instructions for the AI). A malicious email can contain “hidden” instructions that trick the agent into releasing passwords or deleting system files.

- The Sandbox Defense: Experts recommend “Non-Negotiable Guardrails,” including running the agent in Docker or a Cloudflare Worker to isolate the file system. Practically, users should adopt a “two-phone setup” or a separate bot identity, keeping primary accounts isolated.
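As a rough illustration of the Docker suggestion above, the sketch below builds a `docker run` command that executes a single command with no network and one mounted directory. It is not OpenClaw's own sandboxing mechanism; the function name `build_sandbox_command`, the scratch directory, and the `python:3.12-slim` image are assumptions chosen for the example. The Docker flags themselves are standard.

```python
import shlex
from pathlib import Path


def build_sandbox_command(workdir: str, command: list[str],
                          image: str = "python:3.12-slim") -> list[str]:
    """Build a `docker run` argument list for running one command in isolation.

    Hypothetical helper for illustration; it only builds the argument list
    and does not start a container.
    """
    host_dir = Path(workdir).resolve()
    return [
        "docker", "run",
        "--rm",                     # delete the container when the command exits
        "--network", "none",        # no network access from inside the container
        "--read-only",              # container root filesystem is read-only
        "--cap-drop", "ALL",        # drop all Linux capabilities
        "--memory", "512m",         # cap memory use
        "-v", f"{host_dir}:/work",  # only this one host directory is visible
        "-w", "/work",              # run the command from the mounted directory
        image,
        *command,
    ]


if __name__ == "__main__":
    args = build_sandbox_command("./agent-scratch", ["python", "-c", "print('hello')"])
    print(shlex.join(args))  # paste into a shell, or pass `args` to subprocess.run
```

Note that `--network none` suits tool commands the agent launches (builds, scripts, file conversions). The agent process itself still needs a network path to its model provider, so it would need a less restrictive network policy.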

Are you ready to give an AI the keys to your digital life, or are we just witnessing a very expensive, very recursive form of performance art?

The End of “Writing” Code: The 600-Commit Workday

The traditional software engineering lifecycle is being dismantled. Peter Steinberger, the architect behind OpenClaw, has pioneered a shift from manual coding to “Agentic Engineering.”

Steinberger recently reported merging 500 to 600 commits in a single day. This isn’t “Vibe Coding”—the trend of loosely prompting an AI until a demo works. Instead, it is a disciplined system of closed-loop validation. Steinberger acts as the “Architect with a Capital A,” weaving logic into an existing system while the agents self-debug, run their own linting, and execute tests. If the code doesn’t pass the “gate,” the agent iterates until it does.
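The closed-loop "gate" idea can be sketched in a few lines. This is a minimal illustration under assumed names (`run_until_gate_passes`, `GateResult`, and the toy agent and checks are all hypothetical), not Steinberger's actual tooling: a candidate is proposed, every check runs, and the names of failed checks are fed back into the next attempt.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GateResult:
    passed: bool
    attempts: int
    failures: list[str]


def run_until_gate_passes(
    propose: Callable[[list[str]], str],
    checks: dict[str, Callable[[str], bool]],
    max_attempts: int = 5,
) -> GateResult:
    """Ask for a candidate, run every check, feed failures back, repeat."""
    failures: list[str] = []
    for attempt in range(1, max_attempts + 1):
        candidate = propose(failures)  # the agent sees what failed last time
        failures = [name for name, check in checks.items() if not check(candidate)]
        if not failures:
            return GateResult(True, attempt, [])
    return GateResult(False, max_attempts, failures)


if __name__ == "__main__":
    # Toy stand-ins: the "agent" fixes its formatting once lint complains.
    def toy_agent(failed: list[str]) -> str:
        if "lint" in failed:
            return "def add(a, b):\n    return a + b\n"
        return "def add(a,b): return a+b"

    toy_checks = {
        "lint": lambda src: "a,b" not in src,
        "tests": lambda src: "return a" in src,
    }
    print(run_until_gate_passes(toy_agent, toy_checks))
```

In a real setup the checks would shell out to a linter and a test runner. The `max_attempts` cap is what keeps "iterate until it passes" from becoming an unbounded loop.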

In this paradigm, the “Pull Request” is dead, replaced by the “Prompt Request.” Steinberger reviews the intent and the logic of the prompt rather than reading every line of the 15,000-line diffs his agents produce. The human role has shifted from the “how” to the “what”—focusing entirely on System Architecture and Taste.

Configuring Iteration Limits and Guardrails in OpenClaw

To set a max retry limit for OpenClaw, you must explicitly program this behavior into the agent’s “personality” and memory settings, as the software does not have a simple “max retry” button you can toggle in a menu. Here are the specific steps to configure this guardrail:

- Add Explicit Rules to AGENTS.md

The primary way to control OpenClaw’s behavior is through its configuration files, specifically AGENTS.md, which defines how the agent behaves.

• The Rule: You should add a specific line to this file stating: “Stop after three failed attempts.”

• Why it works: This instruction acts as a hard rule for the agent’s logic loop. When it encounters an error (like a failed installation), it checks its history count against this rule and terminates the task rather than looping indefinitely.

- Define a “Max Runtime”

In addition to a retry count, you should define a temporal limit to prevent “infinite loops” where the agent keeps trying slightly different solutions that all fail.

• The Command: Instruct the agent to “terminate the task if it takes longer than [X] minutes” (e.g., 10 minutes).

• Cost Protection: This prevents scenarios where a user wakes up to a $120 bill because the agent spent six hours trying to debug a single Python package installation while they slept.

- Enable Memory Flushing (Critical Step)

For the agent to actually respect the retry limit, it must remember that it failed previously.

• The Prompt: Run the specific command: “Enable memory flush before compaction etc”.

• The Mechanism: Out of the box, OpenClaw can struggle with short-term memory retention. If the context window fills up and “flushes” without saving, the agent may forget its previous three failures. By enabling this setting, you force the agent to commit those failures to long-term memory, ensuring it knows that “Attempt #4” is actually forbidden.

- Use a “Smart” Orchestrator

To ensure these rules are followed, use a high-intelligence model for the decision-making process.

• Orchestrator Configuration: Configure your agent to use a smart model (like Claude Opus) as the “orchestrator” that decides what to do, while using cheaper, faster models (like Haiku or MiniMax) as the “workers” that execute the tasks.

• The Benefit: Larger models are significantly better at self-reflection and recognizing “I am stuck, I should stop,” whereas smaller models are more prone to mindlessly retrying the same failed command.
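The rules above live in the agent's prompt files, so the model can still ignore or forget them. If you wrap tasks in your own scripts, the same two limits can also be enforced in code, outside the model. The sketch below is one way to do that; `run_with_budget` and `BudgetExceeded` are hypothetical names and not part of OpenClaw.

```python
import time


class BudgetExceeded(RuntimeError):
    """Raised when the retry or runtime budget is used up."""


def run_with_budget(task, max_attempts=3, max_seconds=600.0, clock=time.monotonic):
    """Call task() until it succeeds, or stop at the attempt or time limit.

    The time limit is checked between attempts only; it does not interrupt
    an attempt that is already running.
    """
    deadline = clock() + max_seconds
    errors = []
    for attempt in range(1, max_attempts + 1):
        if clock() >= deadline:
            raise BudgetExceeded(f"time limit hit before attempt {attempt}")
        try:
            return task()
        except Exception as exc:  # record the failure, then try again
            errors.append(f"attempt {attempt}: {exc}")
    raise BudgetExceeded("; ".join(errors))


if __name__ == "__main__":
    def always_fails():
        raise RuntimeError("pip install failed")

    try:
        run_with_budget(always_fails, max_attempts=3, max_seconds=600.0)
    except BudgetExceeded as stop:
        print(f"Stopped: {stop}")
```

The defaults mirror the numbers used in this section: three attempts and ten minutes. Because the counter lives in the wrapper rather than in the model's context, it does not depend on the agent remembering earlier failures.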

How to set up your agent to require sign-off for actions

To set up your agent to require sign-off for actions, you must implement Human-in-the-Loop (HITL) workflows. Because OpenClaw (Moltbot) is driven largely by natural language instructions and “skills,” you establish these controls through specific prompting strategies, permission gates, and isolation techniques.

Here are the specific methods to enforce sign-off requirements:

1. The “Plan-Then-Execute” Workflow

Instead of giving a broad command like “fix this code,” you should instruct the agent to function as an analyst that must submit a plan for approval before taking action.

- The “Report First” Prompt: Instruct the agent to review the task (e.g., scanning a codebase or researching a topic) and generate a report or plan of action first.

- The “Go Ahead” Trigger: Do not allow the agent to proceed until you explicitly type a confirmation phrase, such as “Hey Clawbot, go to work” or “Approved”. One developer uses a workflow where the agent writes a full report on what it intends to do; only after he reviews it does he authorize the agent to spin up sub-agents to execute the code.

- Reverse Prompting: You can also use “reverse prompting,” where you ask the agent, “Based on what you know, what do you think we should do?” This forces the agent to present options for your sign-off rather than acting autonomously.
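The plan-then-execute pattern above can be modeled as a small state check: the plan exists as data, and nothing runs until a human reply matches a confirmation phrase. This is an illustrative sketch only; `PlannedTask` and the phrases in `APPROVAL_PHRASES` are assumptions, not an OpenClaw feature.

```python
from dataclasses import dataclass, field

APPROVAL_PHRASES = {"approved", "go to work"}  # hypothetical confirmation phrases


@dataclass
class PlannedTask:
    description: str
    steps: list[str]
    approved: bool = False
    log: list[str] = field(default_factory=list)

    def report(self) -> str:
        """Render the plan for the human to read before anything runs."""
        lines = [f"Plan for: {self.description}"]
        lines += [f"  {i}. {step}" for i, step in enumerate(self.steps, start=1)]
        return "\n".join(lines)

    def review(self, human_reply: str) -> bool:
        """Mark the plan approved only on an exact confirmation phrase."""
        self.approved = human_reply.strip().lower() in APPROVAL_PHRASES
        return self.approved

    def execute(self) -> list[str]:
        if not self.approved:
            raise PermissionError("plan has not been approved by a human")
        for step in self.steps:
            self.log.append(f"done: {step}")  # a real agent would act here
        return self.log


if __name__ == "__main__":
    task = PlannedTask("fix login bug", ["scan codebase", "write patch", "run tests"])
    print(task.report())
    if task.review(input("Reply 'Approved' to proceed: ")):
        print(task.execute())
```

Requiring an exact phrase, rather than any positive-sounding reply, reduces the chance that an ambiguous message is treated as sign-off.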

2. “Draft-Only” Mode for Communications

For high-risk actions like sending emails or Slack messages, you should restrict the agent to drafting content only.

- Drafts Folder: Explicitly instruct the agent: “You are allowed to read emails and draft responses, but you must save them to the Drafts folder. Do not send.” This allows the agent to do the heavy lifting while you retain the final “send” authority.

- Review Requests: For code, have the agent create a Pull Request (PR) rather than pushing directly to the main branch or deploying. This forces a human review step where you must check the code before it goes live.
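A prompt that says "do not send" is advisory. A sturdier version of draft-only mode is to hand the agent a tool that has no send operation at all. The sketch below shows the idea with a toy in-memory mailbox; `Mailbox`, `DraftOnlyMail`, and `human_send` are hypothetical names, and a real setup would wrap an actual mail API.

```python
from dataclasses import dataclass, field


@dataclass
class Mailbox:
    """Toy in-memory mailbox standing in for a real mail account."""
    inbox: list[str] = field(default_factory=list)
    drafts: list[dict] = field(default_factory=list)
    sent: list[dict] = field(default_factory=list)

    def send(self, to: str, body: str) -> None:
        self.sent.append({"to": to, "body": body})


class DraftOnlyMail:
    """The only mail interface handed to the agent: read and draft, never send."""

    def __init__(self, mailbox: Mailbox) -> None:
        self._mailbox = mailbox

    def read_inbox(self) -> list[str]:
        return list(self._mailbox.inbox)

    def save_draft(self, to: str, body: str) -> int:
        self._mailbox.drafts.append({"to": to, "body": body})
        return len(self._mailbox.drafts) - 1  # index of the new draft


def human_send(mailbox: Mailbox, draft_index: int) -> None:
    """Called by the human, outside the agent, after reviewing a draft."""
    draft = mailbox.drafts.pop(draft_index)
    mailbox.send(draft["to"], draft["body"])
```

In Python the underscore on `_mailbox` is only a convention, so this is a design pattern rather than a security boundary. The stronger equivalent is an API credential whose scope allows reading and drafting but not sending.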

3. Operational Guardrails & Permission Gates

You can configure the agent’s environment to physically or logically prevent it from executing destructive commands without permission.

- Destructive Action Gates: Establish a rule that specific actions—such as file deletion, sending messages, or network requests—require explicit approval. In some setups, the agent will naturally pause and ask, “I need to install [Tool Name], is that okay?” before proceeding.

- Whitelisting: Configure the agent to only interact with specific, whitelisted domains or files. If it attempts to access something outside this list (e.g., a non-whitelisted URL), it should be prompted to ask for permission.

- Stop Limits: Set limits on retries and runtime. For example, instruct the agent to “Stop after three failed attempts” or “Notify me if the task takes longer than 10 minutes.” This prevents the agent from entering an expensive loop of failed actions without your knowledge.
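The gate and whitelist rules above amount to a small policy function that answers "allow" or "ask" for each proposed action. The sketch below is a minimal version under assumed names: `ALLOWED_HOSTS`, `NEEDS_APPROVAL`, and the action labels are made up for the example and do not correspond to OpenClaw settings.

```python
from urllib.parse import urlsplit

ALLOWED_HOSTS = {"api.github.com", "news.ycombinator.com"}  # example whitelist
NEEDS_APPROVAL = {"delete_file", "send_message", "network_request"}


def host_is_allowed(url: str, allowed_hosts: set[str] = ALLOWED_HOSTS) -> bool:
    """True only for https URLs whose exact hostname is on the whitelist."""
    parts = urlsplit(url)
    return parts.scheme == "https" and parts.hostname in allowed_hosts


def decide(action: str, target: str = "") -> str:
    """Return 'allow' or 'ask' for a proposed action."""
    if action == "network_request" and host_is_allowed(target):
        return "allow"  # pre-approved destination
    if action in NEEDS_APPROVAL:
        return "ask"    # pause and request human sign-off
    return "allow"


if __name__ == "__main__":
    print(decide("network_request", "https://api.github.com/repos"))
    print(decide("network_request", "https://example.com/upload"))
    print(decide("delete_file", "/home/me/notes.txt"))
```

Comparing the parsed hostname, rather than searching the URL string for an allowed name, avoids lookalikes such as `https://api.github.com@evil.example/`, where the real host is `evil.example`.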

4. “Paper Mode” for Financial Actions

Never give an agent sign-off authority over real money until it is proven safe.

- Paper Trading: If using the agent for finance, connect it to a paper trading account (like Alpaca’s sandbox) first. This allows the agent to execute trades with fake money. You can review its performance and logic in this safe environment before giving it access to real capital.

- Rigid Rule Sets: Even with sign-off, you should give the agent “rigid” guidelines, such as “Only trade S&P 500 ETFs” or “Never exceed $X position size,” to ensure that even if you sign off, the parameters remain safe.
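Rigid rules like the ones above are easiest to trust when they are checked in code before any order reaches a broker. The sketch below validates a proposed order against a symbol list and a position cap. The names and the $1,000 limit are placeholders for illustration, and it deliberately makes no broker API calls.

```python
from dataclasses import dataclass

ALLOWED_SYMBOLS = {"SPY", "VOO", "IVV"}  # S&P 500 ETFs, as an example rule
MAX_POSITION_USD = 1_000.0               # placeholder for the "$X" limit


@dataclass(frozen=True)
class Order:
    symbol: str
    quantity: int
    price: float
    paper: bool = True  # default to the paper (simulated) account


def violations(order: Order, current_position_usd: float = 0.0) -> list[str]:
    """Return every rule the order breaks; an empty list means it may proceed."""
    problems = []
    if order.symbol.upper() not in ALLOWED_SYMBOLS:
        problems.append(f"{order.symbol} is not on the allowed list")
    if order.quantity <= 0:
        problems.append("quantity must be positive")
    new_position = current_position_usd + order.quantity * order.price
    if new_position > MAX_POSITION_USD:
        problems.append(f"position {new_position:.2f} exceeds {MAX_POSITION_USD:.2f}")
    if not order.paper:
        problems.append("live trading is disabled; use the paper account")
    return problems


if __name__ == "__main__":
    for order in (Order("SPY", 1, 500.0), Order("TSLA", 5, 400.0)):
        print(order.symbol, violations(order) or "ok")
```

Because the check returns all violations rather than stopping at the first, the human reviewing a rejected order sees the full picture before deciding whether to adjust the rules.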

5. High-Stakes Verification

For critical tasks, you can use a “dual-layer” approach to sign-off.

- Human + AI Review: For very complex tasks, you can introduce a second AI agent to review the work of the first agent, but reserve the final “execute” decision for yourself. This helps prevent “consent fatigue,” where a human might mindlessly click “approve” because they are tired of reviewing every small step.

Use Cases: Hiring Your First 24/7 Digital Employee

OpenClaw is a persistent agent that learns your habits, stores context in a “soul.md” file, and operates across Telegram, WhatsApp, and Slack. This “unlocks a next level for solopreneurs” by handling tasks that previously required expensive human oversight.

- Proactive Morning Briefings: The gold standard here is Nader Dabit’s setup, which uses seven distinct cron jobs. Every morning, his agent delivers a personalized newsletter replacement: a digest of GitHub trends, top Hacker News stories, an AI-focused Twitter summary, and weather—all curated to his specific interests before he even wakes up.

- Autonomous Software Development: Solopreneur Alex Finn documented his agent, “Henry,” identifying a trending topic on X, autonomously building an article-writing feature for his SaaS product (Creator Buddy), and submitting a Pull Request (PR) for review by dawn.

- Life Triage: The tool has proven effective at navigating “life admin.” In a viral anecdote, an agent managed an insurance dispute with Lemonade. When the company sent a rejection, the AI drafted a response so assertive and technically grounded that the insurer reopened the case.

- Hardware Control: Because it has full system access, OpenClaw can control local machines—running Java code to calculate factorials or performing system maintenance—and manage smart homes via chat apps.

“I have this employee that is just every night while I’m sleeping checking what’s trending… building me little demos… and then I wake up and I just got to approve things.” — Alex Finn, Tech Analyst

To Boldly Go Where No One Has Gone Before

Beyond the stars, OpenClaw represents a fundamental architectural debate. While Big Tech players like IBM and Anthropic have focused on “vertically integrated” agents where the provider controls every layer, OpenClaw offers a loose, modular, open-source layer. As IBM research scientists have noted, this shift proves that true autonomy isn’t limited to large enterprises; it can be driven by the community, provided the agent has full system access.

We are no longer in the era of AI “toys”. We are rapidly moving toward an agent economy where humans may become optional overseers of autonomous digital workforces. OpenClaw represents the ultimate trade-off: unlimited proactive productivity versus a total security nightmare.

As we explore further, we might ask ourselves a few questions:

Are you ready to hire an employee you can’t fully control, or is the risk of the “lethal trifecta” too high a price?

If your agent can hire other agents and communicate without you, are you the CEO of your digital life, or just the observer in the audience?

Are we still the ones in control of the narrative, or are we just the “human companions” in theirs?